Naming this post took waaaayyyy longer than it should have. This is really a post about using the Azure CLI and PowerShell to create and configure a single Azure resource group that enables common Office 365 development scenarios. Phew, that's a lot... let's unpack it a bit and discuss the scripts. Maybe the title should be "Using Azure CLI and PowerShell Az Cmdlets to enable common Office 365 Solutions". Yeah, that's not gonna work either. If you have a better title, leave me a comment; I am all ears!

TL;DR

If you are an Office 365 developer and frequently need to deploy common Azure resources to prototype or develop solutions, these scripts might be for you! Check out the GitHub repository for all of the scripts, and leave a comment or create an issue if you run into problems. I'll help if I can.

Many Office 365 development scenarios require registering an Azure AD application, provisioning Azure resources, and doing typical boilerplate setup just to get started. In my day job I often need to prototype or test things to understand, and help with, technology design and decisions for Office 365 integrations. Setting all of this up can be cumbersome and time consuming. I needed (wanted) a way to deploy the Azure assets I use most often, such as Azure AD applications, Azure Storage, Functions, App Insights, Azure Automation, and more. With those in place I can prototype things like Azure Functions for Flows, provisioning solutions for Teams, Planner and OneNote with MS Graph, SPFx web parts that consume Functions as APIs, PowerShell scripts that deploy PnP templates, and much, much more.

In this Post

This post is about using the Azure CLI and the PowerShell Az cmdlets to produce local and Azure-based resources for common Office 365 development and prototyping scenarios. The script was developed on macOS, even the PowerShell portions, but should be cross-platform. Rather than explain each script, which you can review in the GitHub repo, let's review some things that were very helpful in creating and consuming these scripts.

- Tip 1: Self-Destructing Azure Resource Groups

- Tip 2: Get to Know 'jq'

- Tip 3: Invoking PowerShell from Shell Scripts on macOS

- The Script Overview

- Why Not an ARM Template?

Tip 1: Self-Destructing Azure Resource Groups

I often try to squeeze in coding time, so I get pulled away or only have limited time at night to code. If I get distracted, or simply head to bed, I could leave resources provisioned and costing me money. To ensure that I don't use up all of my Azure credits, or worse yet spend $$ needlessly on a paid Azure subscription, I use Noel Bundick's Self-Destruct Azure CLI extension.

The best way to sum this up is from Noel's tweet!

You ever wish you could create something in Azure and have it automatically go away in X hours or days? I give you self-destruct mode!

— Noel Bundick (@acanthamoeba) June 7, 2018

`az group create -n foo -l eastus --self-destruct 2h`

Great for demos and playing around https://t.co/MlyQoYDlwD

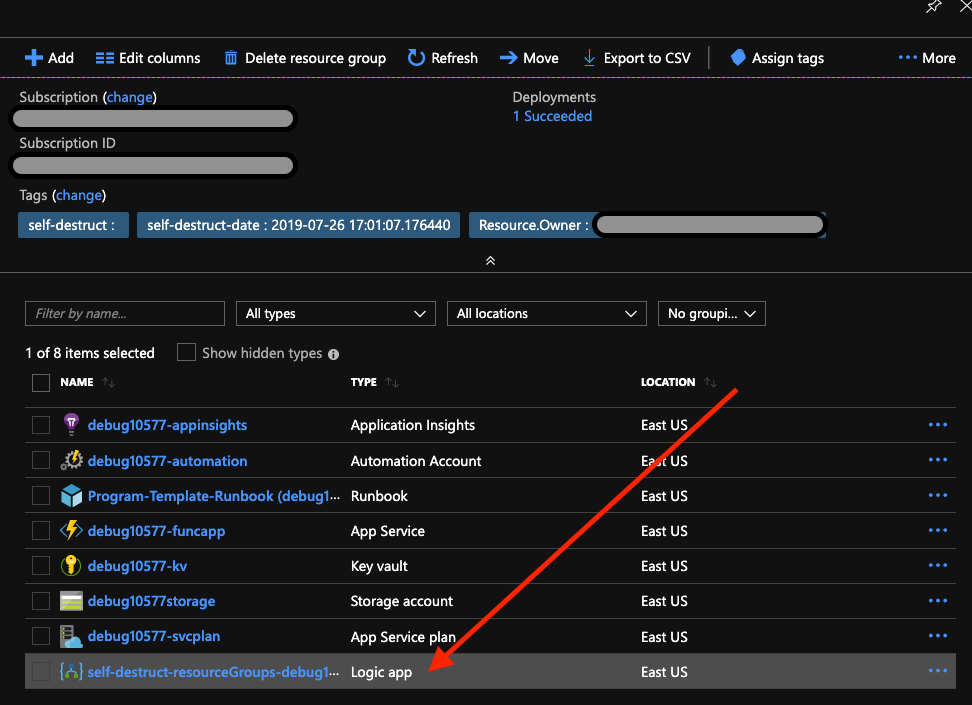

When you install this extension and pass the `--self-destruct` parameter while creating a resource, it creates a Logic App with two (2) activities that call the Azure Management APIs (using an HTTP activity) to delete the targeted resources. To spend as little $ as possible, I create all resources in a single resource group and enable self-destruct on that group. Since this deletes the entire resource group, I only pay for the time I am developing. Perfect! I can always rebuild the entire development environment in a few minutes with the scripts.
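Creating that one self-destructing group is a single command. Here is a minimal sketch wrapping it in a hypothetical `create_dev_resource_group` function (the function name and argument checks are illustrative, not from the repository); only the `--self-destruct` parameter comes from Noel's extension, and the timer format follows the `2h` example in the tweet.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not from the repository): create the one resource group
# that holds every development resource and schedule its deletion.
# Requires the Azure CLI and the noelbundick extension, which adds --self-destruct.
create_dev_resource_group() {
  local name="$1" location="$2" timer="$3"

  if [ -z "$name" ] || [ -z "$location" ] || [ -z "$timer" ]; then
    echo "usage: create_dev_resource_group <name> <location> <timer, e.g. 2h>" >&2
    return 1
  fi

  # Everything created in this group is deleted when the timer expires
  az group create \
    --name "$name" \
    --location "$location" \
    --self-destruct "$timer"
}

# Example, using values loaded from the configuration file:
# create_dev_resource_group "${APP_NAME}${RESOURCE_GRP_SUFFIX}" "$RESOURCE_LOCATION" "$SELF_DESTRUCT_TIMER"
```

Keeping every resource in this one group is what makes the cleanup a single deletion rather than a list of resources to track.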

Tip 2: Get to Know 'jq'

I have mentioned jq in other posts, and it is a must-have tool if you are writing scripts around things like the Azure CLI or the Office 365 CLI. Most CLI tools provide output options, and choosing JSON output makes parsing simple once you spend a little time on the jq documentation page. For the record, I agree that PowerShell makes this kind of stuff SO much easier!

Using jq to read configuration from a file

The following snippet loads the configuration values from a file passed on the command line, for example `./create-resources.sh ../config/dev.settings.json`. The file is read with cat, and each value is then extracted with jq.

```bash
# Read configuration file content (path passed as the first argument)
CONFIG=$(cat "$1")
DEBUG_OUTPUT="none"

# Set all configuration values (quote $CONFIG so the JSON reaches jq intact)
CONFIGURATION=$(echo "$CONFIG" | jq -r '.Configuration')
SELF_DESTRUCT_TIMER=$(echo "$CONFIG" | jq -r '.SelfDestructTimer')
APP_TEMP="$CONFIGURATION$RANDOM"
# Lowercase the name; ${APP_TEMP,,} needs bash 4+, but macOS ships bash 3.2
APP_NAME=$(echo "$APP_TEMP" | tr '[:upper:]' '[:lower:]')
APP_SVC_SUFFIX=$(echo "$CONFIG" | jq -r '.AppServicePlan')
APP_INSIGHTS_SUFFIX=$(echo "$CONFIG" | jq -r '.AppInsightsSuffix')
RESOURCE_GRP_SUFFIX=$(echo "$CONFIG" | jq -r '.ResourceGroupSuffix')
KEYVAULT_SUFFIX=$(echo "$CONFIG" | jq -r '.KeyVaultSuffix')
RESOURCE_LOCATION=$(echo "$CONFIG" | jq -r '.ResourceLocation')
```
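One thing to watch for: with `-r`, jq prints the string `null` for a key that is missing from the file, so a typo in the settings can quietly become part of a resource name. A minimal sketch of a guard, using a hypothetical `config_value` helper and jq's `-e` flag, which makes jq exit non-zero when the result is `null` or `false`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a required value from the JSON settings file,
# failing loudly if the key is missing or null instead of returning "null".
# Note: a literal false value is also treated as missing because of -e.
config_value() {
  local file="$1" key="$2" value
  # -e: exit status 1 when the result is null or false
  if ! value=$(jq -er --arg key "$key" '.[$key]' "$file"); then
    echo "Missing required setting '$key' in $file" >&2
    return 1
  fi
  printf '%s\n' "$value"
}

# Example:
# RESOURCE_LOCATION=$(config_value "$1" ResourceLocation) || exit 1
```

This still costs one jq call per key, but it stops the script before anything is provisioned with a bad name.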

Using jq to parse command output

Once the configuration is loaded, I can check things like dependencies using jq. To make sure the Self-Destruct extension is installed, I can parse the Azure CLI output like below and stop the script as needed.

```bash
EXT_INSTALLED=$(az extension list --output json | jq '.[]| select(.name == "noelbundick") | .version')
```
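EXT_INSTALLED is empty when the extension is missing, which makes it easy to bail out early. A minimal sketch, assuming a hypothetical `extension_installed` function that applies the same jq filter to the `az extension list --output json` output piped into it:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the named extension appears in the
# JSON produced by `az extension list --output json` (read from stdin).
extension_installed() {
  local name="$1" version
  version=$(jq -r --arg name "$name" '.[] | select(.name == $name) | .version')
  [ -n "$version" ]
}

# Example: stop early when the self-destruct extension is missing.
# if ! az extension list --output json | extension_installed noelbundick; then
#   echo "Install the noelbundick Azure CLI extension first." >&2
#   exit 1
# fi
```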

Using jq also lets you parse output for use in other commands. Part of the overall script creates Azure AD applications, so I need to retrieve application IDs for use in other calls. This can be done like so:

```bash
# Retrieve the clientId for the Azure Function App post creation
funcAppClientId=$(az ad app list --output json | jq -r --arg appname "${APP_NAME}${FUNC_APP_SUFFIX}" '.[]| select(.displayName==$appname) |.appId')
```
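Azure AD lets several applications share a display name, and the filter above returns an empty string or several IDs in those cases without complaint. A minimal sketch of a stricter lookup, using a hypothetical `app_id_for` function that reads `az ad app list --output json` output from stdin and fails unless exactly one application matches:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the appId of exactly one application whose
# displayName matches; jq's error() makes the call fail otherwise.
app_id_for() {
  local display_name="$1"
  jq -r --arg appname "$display_name" '
    [.[] | select(.displayName == $appname) | .appId]
    | if length == 1 then .[0]
      else error("expected exactly one app named \($appname), found \(length)")
      end'
}

# Example:
# funcAppClientId=$(az ad app list --output json | app_id_for "${APP_NAME}${FUNC_APP_SUFFIX}") || exit 1
```

Piping `az ad app list --display-name "$name" --output json` into it would also let Azure AD do the filtering on the server side.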

Use jq to output configuration

Parsing output from CLI commands is useful, but jq can also produce JSON for something else to read. I wanted to write most of the configuration information from the shell script to a JSON file so that a PowerShell script could read it (btw, PowerShell on macOS is just a wonderful thing!).

For example, many Office 365 scenarios benefit from an Azure Automation account. Unfortunately, the Azure CLI does not have any commands to create Azure Automation accounts, but PowerShell does. Sad face, I am on a Mac! But wait, PowerShell to the rescue! By writing the configuration out with jq, my main shell script can call a PowerShell script that provisions an Automation Account using the PowerShell Az cmdlets. Awesome!

```bash
# Build a JSON object with the configuration PowerShell needs to create the Automation Account
configJson=$(jq -n \
  --arg tenantId "$tenantId" \
  --arg subId "$subscriptionId" \
  --arg appName "${APP_NAME}" \
  --arg funcName "${APP_NAME}${FUNC_APP_SUFFIX}" \
  --arg resGrpName "${APP_NAME}${RESOURCE_GRP_SUFFIX}" \
  --arg resGrpLoc "$RESOURCE_LOCATION" \
  --arg storeAcct "${APP_NAME}${STORAGE_SUFFIX}" \
  --arg storeKey "${storageAccountKey}" \
  --arg progReqQueue "$PROGRAM_REQUEST_QUEUE" \
  --arg autoAcctName "${APP_NAME}${AUTOMATION_ACCOUNT_SUFFIX}" \
  --arg progBookName "$programRunbookName" \
  --arg progBookPath "$programRunbookFilePath" \
  --arg progWebhookName "$programWebhookName" \
  --arg provAttempts "$PROVISIONING_ATTEMPTS" \
  --arg funcAppId "$funcAppClientId" \
  --arg funcAppSecret "$funcAppClientSecret" \
  --arg flowAppId "$flowAppClientId" \
  --arg flowAppSecret "$flowAppClientSecret" \
  --arg spUrl "${SHAREPOINT_TENANT_NAME}" \
  '{
    appName: $appName,
    funcAppName: $funcName,
    tenantId: $tenantId,
    subscriptionId: $subId,
    resourceGroupName: $resGrpName,
    resourceGroupLocation: $resGrpLoc,
    automationAccountName: $autoAcctName,
    programRunbookName: $progBookName,
    programRunbookFilePath: $progBookPath,
    programWebhookName: $progWebhookName,
    storageAccountName: $storeAcct,
    storageAccountKey: $storeKey,
    programsQueue: $progReqQueue,
    provisioningAttempts: $provAttempts,
    funcAppClientId: $funcAppId,
    funcAppClientSecret: $funcAppSecret,
    flowAppClientId: $flowAppId,
    flowAppClientSecret: $flowAppSecret,
    sharepointUrl: $spUrl
  }')

cd "$__dir"
# Quote the variable so the JSON is written exactly as jq produced it
echo "$configJson" > "$__root/config/automation-config.json"
```
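That file carries the storage account key and both client secrets, so it deserves some care. A minimal sketch, assuming a hypothetical `write_automation_config` function trimmed to three of the fields: `jq --arg` escapes quotes and backslashes in the values, `umask 077` keeps a newly created file readable only by the current user, and an `EXIT` trap (shown in the usage comment) removes the file even if a later step fails.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a trimmed-down hand-off file for PowerShell.
# jq --arg JSON-escapes each value, so secrets containing quotes,
# backslashes or spaces survive the round trip.
write_automation_config() {
  local out_file="$1" tenant_id="$2" storage_key="$3" func_secret="$4"
  (
    # Subshell so the umask change does not leak to the caller; applies
    # only if the file does not already exist.
    umask 077
    jq -n \
      --arg tenantId "$tenant_id" \
      --arg storeKey "$storage_key" \
      --arg funcAppSecret "$func_secret" \
      '{tenantId: $tenantId, storageAccountKey: $storeKey, funcAppClientSecret: $funcAppSecret}' \
      > "$out_file"
  )
}

# Example: remove the file when the script exits, even on failure.
# CONFIG_OUT="$__root/config/automation-config.json"
# trap 'rm -f "$CONFIG_OUT"' EXIT
# write_automation_config "$CONFIG_OUT" "$tenantId" "$storageAccountKey" "$funcAppClientSecret"
```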

Tip 3: Invoking PowerShell from Shell Scripts on macOS

I have been developing for Windows for more than 20 years, but sometimes a macOS issue trips me up for a bit. One tricky bit for an old dog like me was using the right hashbang (#!) line so the script can be executed directly. The following is the correct way to enable this for a PowerShell script on macOS; see this StackOverflow answer for a great explanation. With that line in place, and the script marked executable with chmod +x, calling a PowerShell script from a shell script is just a standard ./ invocation.

```powershell
#!/usr/local/bin/pwsh -File
[CmdletBinding()]
param(
    [Parameter(Mandatory = $true)]
    [string] $configFile
)
#... remainder of script
```

This lets you invoke the PowerShell script (PowerShell 6.0, which is now cross-platform) from a shell script with something like the following:

```bash
# now call the create-automation-account.ps1 script
./create-automation-account.ps1 "$__root/config/automation-config.json"
# remove the config file afterwards; it contains secrets
rm "$__root/config/automation-config.json"
```
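If the execute bit or the hard-coded `#!` path gets in the way (pwsh is not installed at /usr/local/bin on every machine), calling `pwsh` explicitly is an alternative. A minimal sketch with a hypothetical `run_pwsh_script` function; `-NoProfile` skips loading the user's profile, and `-File` runs the script with the remaining arguments bound to its parameters, just as the positional call above binds `$configFile`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a PowerShell script from bash without relying on
# the script's #! line or its execute permission.
run_pwsh_script() {
  local script="$1"
  shift
  if ! command -v pwsh >/dev/null 2>&1; then
    echo "PowerShell (pwsh) was not found on PATH." >&2
    return 127
  fi
  pwsh -NoProfile -File "$script" "$@"
}

# Example:
# run_pwsh_script ./create-automation-account.ps1 "$__root/config/automation-config.json"
```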

This is very useful, since the Azure CLI has no way of creating an Azure Automation Account, but the PowerShell Az cmdlets make it simple.

Use the New Az Module in Azure Automation

Using the Az modules in Azure Automation was on my list to learn, and integrating them into the script for the Automation Account was relatively simple. When configuring the Automation Account, the script installs modules and their dependencies. Finding the right status to check while the modules install was a little tricky, but the install has worked great ever since. Keep in mind you may need to load more modules and their dependencies for your solution.

The Script Overview

When I am prototyping or testing functionality in Office 365 development scenarios, the same resources and configurations seem to be needed again and again. The preceding tips were all things I learned in the process of building the scripts, but the overall script enables jump-starting scenarios like a Flow that calls an Azure AD secured Azure Function, SPFx web parts that call Azure Automation webhooks, a PowerApp that stores data in Azure Table Storage, and many more! I found myself using the Azure CLI a lot for these things and thought putting them into a single script would save significant time.

The main script performs the following:

- Reads a json configuration for Azure Subscription, O365 tenant info, and more

- Checks for prerequisite tools (jq, Azure CLI, Functions Core Tools, Office 365 CLI, and more)

- Creates a resource group for all provisioned resources

- Enables self-destruct on the resource group (for configurable duration)

- Creates a storage account

- Creates a storage queue in the storage account

- Creates an App Service Plan for an Azure Functions app service

- Creates an App Insights instance for the app service

- Creates a Function app in the App Service Plan with App Insights and its key

- Registers an Azure AD Application to be used for an Azure Function

- Assigns App Roles to the Function Application

- Grants consent to the Function AD Application (to access assigned resources)

- Configures the Function App settings to enable code access to tenant and AD app

- Registers an Azure AD Application for use from Flows (enables calling into Azure Function from Flow)

- Creates a KeyVault and sample secret values (use from any Azure resource or code)

- Cleans a dist folder (typically used for the Function App zip deploy)

- Builds and Zip Deploys Azure Function App (path provided in config)

- Calls PowerShell script to provision Azure Automation Account and Runbook

- Retrieves all Function App settings to enable running Functions locally
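To give a feel for the individual steps, here is a minimal sketch of two of them, the storage account and its queue, using a hypothetical `create_storage_with_queue` function. The Standard_LRS SKU and the choice of the first account key are assumptions, and the repository's own commands may differ. Storage account names must be 3 to 24 lowercase letters and numbers, which is one reason a lowercase app name is handy.

```bash
#!/usr/bin/env bash
# Hypothetical helper covering two steps from the list: create the storage
# account, read its first access key, and create a queue in that account.
# Assumes the resource group exists and the Azure CLI is signed in.
# Prints the account key so later steps (e.g. the automation config) can use it.
create_storage_with_queue() {
  local group="$1" account="$2" location="$3" queue="$4" key

  az storage account create \
    --name "$account" \
    --resource-group "$group" \
    --location "$location" \
    --sku Standard_LRS \
    --output none || return 1

  key=$(az storage account keys list \
    --account-name "$account" \
    --resource-group "$group" \
    --query "[0].value" \
    --output tsv) || return 1

  az storage queue create \
    --name "$queue" \
    --account-name "$account" \
    --account-key "$key" \
    --output none || return 1

  printf '%s\n' "$key"
}

# Example:
# storageAccountKey=$(create_storage_with_queue "$RG" "${APP_NAME}${STORAGE_SUFFIX}" "$RESOURCE_LOCATION" "$PROGRAM_REQUEST_QUEUE") || exit 1
```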

Why Not an ARM Template?

I have worked with ARM templates before, but my goal was to dig a bit deeper into the Azure CLI and Az PowerShell cmdlets. ARM templates are awesome, but I wanted a script that builds an entire development environment (I also use Azure DevOps Pipelines for CI/CD on the project) and takes advantage of other functionality the Azure CLI provides, specifically the Self-Destruct extension. In addition, getting some of the keys and secrets out of ARM templates can still be challenging.

This was more about saving some $$, learning some new techniques, and enabling common prototype and development scenarios. It is not the most efficient solution, but it was very informative and I learned a lot! One way to simplify this might be to keep a development resource group in Azure and simply use `func azure functionapp fetch-app-settings` to bring the configuration local each time, but that would mean keeping the storage, compute and other resources active. I guess I am just cheap.
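For reference, a minimal sketch of that alternative, assuming a Functions project directory and an existing Function App. `fetch_function_settings` is a hypothetical wrapper around the Azure Functions Core Tools command, which writes the app's settings into the local.settings.json of the directory it runs in.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pull an existing Function App's settings into the
# local.settings.json of a Functions project (Azure Functions Core Tools).
fetch_function_settings() {
  local project_dir="$1" function_app="$2"
  if [ ! -d "$project_dir" ]; then
    echo "No such project directory: $project_dir" >&2
    return 1
  fi
  # Subshell so the caller's working directory is unchanged
  (cd "$project_dir" && func azure functionapp fetch-app-settings "$function_app")
}

# Example:
# fetch_function_settings ./src/functions "${APP_NAME}${FUNC_APP_SUFFIX}"
```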

The Scripts

As mentioned above, the scripts can be found on GitHub in the pkskelly/o365-azuredevenv repository if you are interested. The main script, create-devenv.sh, should be reusable once you review the steps required in the Readme.md. Your mileage may vary, and you may want or need to modify the scripts to suit your needs. Happy to help if I can; just leave me a comment below or log an issue on GitHub.

These scripts let me quickly develop and prototype locally, and save money by deleting the resources when I am not using them. I have also found that combining some of the Azure CLI commands or Az cmdlets in an Azure DevOps pipeline helps reduce friction in the development and CI/CD processes for all sorts of Office 365 solutions.

Final Thoughts

These scripts have actually been fun to write, and they continue to evolve. They might be useful to others in some way, so I thought I would share them. I'd love to make them a bit more robust and have others benefit from them, so I am open to any suggestions (no promises on implementing).

HTH - Tweet or leave a comment and let me know if this is helpful. #SharingIsCaring